Part of my series countering common misconceptions in space journalism.

This blog is a follow-on to my original post on Starlink. Starlink is an emerging high performance satellite-based internet routing network developed by SpaceX. Its ultimate purpose is to become the de facto internet backbone provider, connect billions more people to the internet, and revolutionize access to space.

The usual disclaimers apply. I have no relevant inside knowledge of Starlink operations. I’m not an expert in networking, and unlike Starlink’s staff I haven’t spent years working only on this problem. In fact, I’m usually deeply confused at the best of times. But I had a cool idea and I wanted to share it.

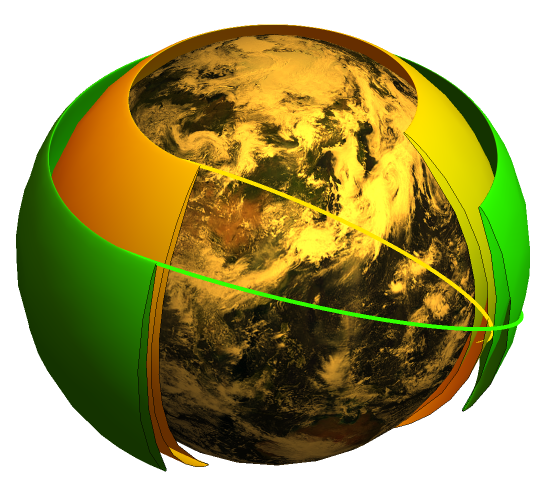

As of September 2020, the plan is for tens of thousands of Starlink satellites to orbit in about 9 separate low Earth orbits, each orbit with a different altitude and inclination. Each sub-constellation in a single orbit forms, in effect, a northward and southward comoving sheet of satellites that, when laser links are implemented, can communicate readily to local satellites. Thus, each orbit forms a “double cover” of the Earth up to some latitude.

Initially, Starlink satellites will communicate with each other via ground stations and user terminals. Later versions will implement laser links to other nearby satellites in the same orbit, permitting high data flows within each 2D data sheet. With nine separate orbit families, each having a north- and south-moving sheet, routing can get quite complicated.

Here’s a great video on some details of Starlink routing.

For the purposes of this blog, each unidirectional microwave or laser link can be thought of as a discrete channel linking many nodes, each of which functions as a router. Thus, each satellite is a routing node connecting dozens of incoming and outgoing data links. Each discrete channel would have self-contained error correction, encoding, and dynamic link quality assessment. The user terminals and high bandwidth gateways can also be thought of as nodes in the identical sense.

At the packet level, the question of Starlink routing boils down to “how does a packet traverse the network?” This question is complicated by the following factors, expanded in the rest of this blog:

- Satellites are moving, so links between nodes are constantly changing.

- Connections between satellites are ephemeral and subject to unexpected degradation, so packets may get lost.

- Ideally, operation is decentralized, private, and maximally agnostic, in addition to preserving low latency.

This is quite a wish list.

This blog focuses on the security and privacy aspects of the Starlink network. If these were not concerns it would be relatively trivial to perform some global simulation of the constellation, centrally calculate optimal routes, push that info out to the satellites themselves, and have them execute precomputed behavior. With TLS, the packet contents would be encrypted from end to end but, crucially, network topology and packet metadata would be exposed.

In many ways, internet metadata is more of a privacy concern than the packet contents themselves. The metadata encodes the graph, social and otherwise, of anyone on Earth: a unique, time-evolving fingerprint that often reveals more about a person than the packet contents.

Exposing metadata, even to SpaceX, is a bad idea. Intelligence agencies can use it to spy, while competitors can use it to undermine SpaceX’s technological advantages. If SpaceX has access to it, it can be legally compelled to filter content in various jurisdictions, undermining freedom and net neutrality and greatly increasing legal risk. Since SpaceX would probably prefer to spend money on engineers rather than lawyers, building a network that lacks access to metadata, for anyone, is a good idea. Such a network by default preserves privacy, ensures security for e-commerce, and discourages hackers from meddling.

The ideal for the network is that it function as a decentralized autonomous transparent communications medium with no side channel leakage, no persistence, minimal state, forward secrecy, and metadata protection, even in the case where the satellites themselves are compromised or logging data. Yes, the threat model has to include the possibility that SpaceX’s own hardware or software could misbehave. This makes the routing problem a variant of the Byzantine Generals problem, though blockchain is not required here.

Since the threat model has to include the possibility of radio or laser signals being intercepted by third party receivers, appropriately encrypted signals should be statistically indistinguishable from white noise.

While the Tor network was set up to provide some of these privacy-preserving features, it lacks the performance, reliability, functionality, and geographic connection necessary to work for Starlink. However, a well executed Starlink network should preserve privacy just as well as Tor. Starlink also has to provide maximum bandwidth, minimum latency, and minimal packet header overhead.

This blog won’t go into detail on the Border Gateway Protocol, the usual system the internet uses to pass packets between networks. That said, the geographic size and speed of the Starlink network does present some interesting challenges for operators of content delivery networks. I’m sure Cloudflare has thought about this!

Instead, I’m going to focus on the nuts and bolts of how packets might actually traverse the interior of the Starlink network without sacrificing speed or privacy.

How to actually make it work?

At its core, the question boils down to how a packet can traverse the network from ground terminal to multiple satellites to ground terminal, exploiting some kind of map between IP addresses, physical locations, and network topology, in a robust, decentralized way. What is the barest minimum quantity of logic that can do this?

In other words, how much contextual information can we obscure in a packet header and still have functionality?

The time scale for a packet to travel around the entire Earth is about a tenth of a second. On this time scale, the satellites move about half a mile, while a ground station at the equator would move about 50 meters due to the Earth’s rotation. To a good approximation, at the speed of light the satellites are not moving, so we don’t have to worry about packets getting splinched.
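As a rough sanity check on those figures, here is a back-of-envelope sketch. The 550 km shell altitude and the physical constants are assumptions added for illustration, not numbers from SpaceX.

```python
import math

# Assumed constants (not from the post): standard physical values and a
# hypothetical 550 km shell altitude.
C_KM_S = 299_792.458            # speed of light in vacuum, km/s
EARTH_CIRCUMFERENCE_KM = 40_075.0
EARTH_RADIUS_KM = 6_378.137
MU_KM3_S2 = 398_600.4418        # Earth's gravitational parameter
SIDEREAL_DAY_S = 86_164.1
ALTITUDE_KM = 550.0

# Time for light to go once around the Earth.
transit_s = EARTH_CIRCUMFERENCE_KM / C_KM_S

# Circular orbital speed at the assumed altitude, and distance covered
# by a satellite during one such transit.
orbital_speed_km_s = math.sqrt(MU_KM3_S2 / (EARTH_RADIUS_KM + ALTITUDE_KM))
sat_shift_km = orbital_speed_km_s * transit_s

# Distance an equatorial ground station moves due to Earth's rotation.
ground_speed_km_s = EARTH_CIRCUMFERENCE_KM / SIDEREAL_DAY_S
ground_shift_m = ground_speed_km_s * transit_s * 1000.0

print(f"transit time:   {transit_s * 1000:.0f} ms")
print(f"satellite move: {sat_shift_km:.2f} km")
print(f"ground move:    {ground_shift_m:.0f} m")
```

Under these assumptions the satellite shift comes out at about a kilometer and the ground shift at a few tens of meters, the same order as the estimates above.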

In order to find the right beam to the right destination ground station, a packet doesn’t need to know anything other than the rough GPS location of the destination ground station – two decimal places is plenty.
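To get a feel for what "two decimal places" means, the sketch below estimates the size of the resulting cell and how many header bits such a coordinate would need. The function names are hypothetical and the mean Earth radius is an assumption.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius

def quantize(lat, lon, decimals=2):
    """Round a coordinate to the given number of decimal places."""
    return round(lat, decimals), round(lon, decimals)

def cell_size_km(lat, decimals=2):
    """Approximate north-south and east-west size of one quantization step."""
    step_deg = 10 ** -decimals
    km_per_deg = 2 * math.pi * EARTH_RADIUS_KM / 360.0
    north_south = step_deg * km_per_deg
    east_west = north_south * math.cos(math.radians(lat))
    return north_south, east_west

def bits_needed(decimals=2):
    """Bits for a fixed-point latitude plus longitude at this resolution."""
    lat_steps = 180 * 10 ** decimals + 1   # -90.00 .. +90.00 inclusive
    lon_steps = 360 * 10 ** decimals       # -180.00 .. +179.99
    return math.ceil(math.log2(lat_steps)) + math.ceil(math.log2(lon_steps))

print(quantize(40.7128, -74.0060))
print(cell_size_km(40.71))
print(bits_needed())
```

At this resolution a cell is roughly a kilometer across, and the whole destination fits in about four bytes of header.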

In principle, even this information can be obfuscated from nearly all the hardware on the packet’s path. All the hardware needs to know is which direction is fastest, and that can include any buffer backlogs on that particular router.

Each packet is encrypted and given a header by the origin SpaceX hardware which encodes the rough geolocation of the end point, determined either by caching or look up. This geolocation is split into varying pieces and encrypted using time- and space-evolving keys. These keys remain valid for, say, one second. Each satellite has a key that is updated continuously depending on the local time and the location of the satellite. Provided the time window is valid, a decryption operation unlocks 2 or 3 bits of salient detail, and no more. This is enough for the router to shunt a pointer in its buffer to the appropriate output channel in real time.
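The following is a toy sketch of what a time- and space-evolving key could look like, not a description of anything SpaceX does. It assumes a hypothetical shared secret, a hypothetical integer `cell_id` standing in for the satellite's location, and a per-packet nonce; a single shared secret is a simplification that would not survive the compromised-satellite threat model described earlier.

```python
import hashlib
import hmac

WINDOW_SECONDS = 1  # key lifetime, taken from the "say, one second" above

def hop_key(network_secret: bytes, unix_time: float, cell_id: int) -> bytes:
    """Derive a key that changes every time window and for every cell."""
    window = int(unix_time // WINDOW_SECONDS)
    msg = window.to_bytes(8, "big") + cell_id.to_bytes(4, "big")
    return hmac.new(network_secret, msg, hashlib.sha256).digest()

def mask_bits(key: bytes, nonce: bytes, value: int, nbits: int = 3) -> int:
    """XOR a small routing hint with a pad derived from the key and nonce."""
    field = (1 << nbits) - 1
    pad = hmac.new(key, nonce, hashlib.sha256).digest()[0] & field
    return (value ^ pad) & field

# XOR is its own inverse, so unmasking is the same operation.
unmask_bits = mask_bits

# Hypothetical values for illustration only.
secret = b"hypothetical-shared-secret"
nonce = b"packet-0001"
sender_key = hop_key(secret, 1_600_000_000.2, cell_id=42)
masked = mask_bits(sender_key, nonce, 0b001)

# A satellite in the same cell and time window derives the same key.
receiver_key = hop_key(secret, 1_600_000_000.9, cell_id=42)
print(unmask_bits(receiver_key, nonce, masked))
```

Once the window rolls over, the derived key changes, so a late or out-of-place decryption yields an unrelated three-bit value rather than the routing hint.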

For example, a packet originates in Los Angeles destined for New York. The first satellite is connected to several thousand user terminals and a handful of gateways. It determines (only) that the packet is traveling north east and routes it in that direction. Even if it logs the information about the packet, all it will know is the time it arrived, how long it was, and which direction it went.

The last satellite in the chain is able to read the rough geolocation of the destination terminal, and assign the packet to the correctly-oriented beam. But it doesn’t know where the packet came from other than “south west”.

Every user terminal pointed at that satellite receives every data packet sent in that beam, but only the intended recipient will have the necessary keys to decrypt the packet, convert it back to regular internet traffic and either send it off on some ground-based fiber, into a server, or whatever.

Even if the packet is intercepted, within a second its geolocation keys expire and it is no longer distinguishable from white noise.
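The Los Angeles to New York example can be made concrete with a small sketch that reduces a destination to a three-bit compass octant. The city coordinates are approximate, the octant encoding is a hypothetical choice, and a real satellite would compute the bearing from its own position and pick among its actual links rather than compass directions.

```python
import math

OCTANTS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]  # 3-bit codes 0..7

def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(p2)
    x = (math.cos(p1) * math.sin(p2)
         - math.sin(p1) * math.cos(p2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def octant(bearing_deg):
    """Quantize a bearing into one of eight 45-degree sectors."""
    return int((bearing_deg + 22.5) // 45) % 8

# Approximate city-center coordinates (assumed for illustration).
LOS_ANGELES = (34.05, -118.24)
NEW_YORK = (40.71, -74.01)

bearing = initial_bearing(*LOS_ANGELES, *NEW_YORK)
code = octant(bearing)
print(f"bearing {bearing:.1f} deg -> code {code:03b} ({OCTANTS[code]})")
```

The bearing comes out at roughly 66 degrees, which lands in the north-east octant, so the first hop needs to learn nothing beyond those three bits.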

Even if an extremely well-motivated adversary were able to collect a log on every packet, performing a timing attack would require that each separate log’s clock offset be corrected. In practice, executing a zero-day on the target’s device is more likely.

In the end, such a scheme is not that different from how postal addresses work. Each step in the delivery only needs to know one line of the address, and typically only your local postal worker will have the granular knowledge of names and addresses necessary to ensure that the right people get the right letters. A sufficiently zealous privacy-minded user could enclose their message in four separate envelopes, each with the next line of the address, to be opened only on delivery to successively more localized geographic regions.

Finally, I am certain that there is a countably infinite number of ways to implement real-time decentralized routing on a satellite internet network, and that optimality is not equivalent to being the first one I thought of. How would you like to see the system work?

Interesting ideas. I have posted a link to https://www.quora.com/q/istvjducvjlwiaat for anybody who is interested.

Can’t wait to get on starlink here in eastern PEI Canada. My whole family are Elon fans. Saving for our Tesla.

Well done. Speaking of securing traffic, one could update keys to websites on the internet, as Starlink is faster, with less latency than ground traffic.

“Every user terminal pointed at that satellite receives every data packet sent in that beam”

Oh no no no, that sounds bad, doesn’t it? Generally telcos avoid this problem by time and frequency modulation and by providing a set of channels to each “terminal” where their data is sent and received. Would that be possible for a fast-moving “base station” in LEO?

Good point. I think I meant receives in an electrical sense if not a logical sense.

Makes sense, I am also interested in simulating such grid structure and communication — maybe a weekend project 😀 good writeup btw.

Please report back!

Also, is there any information on how Starlink may be latching or deregistering terminals at such speeds? That also sounds like an interesting problem.

You’re assuming the network will operate like a traditional IP network, and that is highly unlikely. The structure of Starlink will likely be similar to an IP transport carrier that supports MVNO operations, like most mobile operators. The underlying transport would likely be IPv6 based, with sub-200 ms IGP convergence. It’s likely that adjacent satellites remain relatively adjacent from an IP perspective, so MACsec would be fast and effective. To ensure any Packet Gateway or BNG function can return traffic efficiently, you would group satellites into approximate clusters that each span a large state-sized area; let’s call this 20 satellites. Using RFC 3107, each IGP can be interconnected, but ones in the same area can filter router IDs that are in the same area towards ground stations. Ground stations then only need to see routes of nearby satellites and therefore access nodes. As with a mobile operator, your access device handoff is done with a local IP fabric that is independent of the user’s PPP session that carries their telco session. If an MVNO wants to encrypt its customer traffic or provide lawful interception, that’s completely independent of what Starlink needs to support. Obviously in most cases Starlink is the MVNO, but that does not exclude them supporting third party operators.

I’m going to have to Google some acronyms, and if you write a blog on this I will link to it!

You missed a complicating factor: the traffic demand on the network is highly uneven. Satellites over New York City, for example, can expect orders of magnitude more load than satellites over the Southern Ocean.

Also, you can learn a lot about how satellite networks work by looking at cellular networks on Earth. Many of the same problems and solutions are applicable. This security issue is not unique to Starlink, nor to satellite communications.

Here is a paper on packet routing algorithms (orbital and ground) developed for a similar problem in a startup project called Yaliny a few years ago in Russia: https://doi.org/10.2298/YJOR181015034B

This reads like a good beginning of a new series! Staying focused on the fundamentals is the key; implementing it like MVNOs or using existing RFCs “as is” is not the way SpaceX operates in general. Any “machine” that Elon operates has multiple buttons that can be pressed at different times to achieve multiple technological or economic outcomes. If it is advantageous to “reinvent the wheel”, his companies will do so.

I have some thoughts on sending packets across the constellation.

Assuming that it is practical to save the orbital parameters of all satellites on each one allowing it to calculate the instantaneous positions, Dijkstra’s algorithm can calculate the least-node path to the destination and send the packet to the next satellite. The next can repeat until it goes to the ground hub or terminal.

The satellites and recipient are stationary for practical purposes because switching is fast and communications happen at light speed.

There is nothing particularly difficult about such an algorithm.

I think recomputing the globally optimal path for all packets might be a bit resource intensive.

Dijkstra’s algorithm adapted for a Euclidean metric is blindingly fast and is used by video games such as Sim City to compute the path of every agent travelling across the map. It is also compact. Calculating instantaneous positions of the satellites is standard orbital mechanics, and should also be compact and fast. The problem is the orbital parameters for the constellation, but that should be a few tens of MB for the constellation.

Dynamically, the algorithm needs to identify satellites capable of communicating with each other, and feed links between them into the algorithm. It may be enough to restrict the maximum distance between acceptable links.

I don’t see any of this as particularly onerous, and it is better than having paths computed on the ground.
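A minimal sketch of that idea, assuming the instantaneous positions have already been computed from the orbital parameters (the propagation step is omitted) and that any two satellites within a hypothetical maximum range can link. It weights links by distance rather than counting nodes, and the positions in the example are made up.

```python
import heapq
import math

def build_links(positions, max_range_km):
    """Link every pair of nodes closer than max_range_km (brute force)."""
    names = list(positions)
    links = {name: [] for name in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            d = math.dist(positions[a], positions[b])
            if d <= max_range_km:
                links[a].append((b, d))
                links[b].append((a, d))
    return links

def shortest_path(links, src, dst):
    """Dijkstra's algorithm; returns the node list or None if unreachable."""
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, math.inf):
            continue  # stale heap entry
        for nxt, weight in links[node]:
            nd = d + weight
            if nd < dist.get(nxt, math.inf):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]

# Made-up Cartesian positions in km, for illustration only.
positions = {
    "A": (0.0, 0.0, 0.0),
    "B": (1000.0, 0.0, 0.0),
    "C": (2000.0, 0.0, 0.0),
    "D": (1000.0, 5000.0, 0.0),
}
links = build_links(positions, max_range_km=1500.0)
print(shortest_path(links, "A", "C"))
print(shortest_path(links, "A", "D"))
```

The brute-force link search is quadratic in the number of satellites, so a full constellation would probably want a spatial index or a fixed neighbor list instead.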

I think you are talking about the OSPF protocol, but OSPF only supports about 200 nodes in a convergence zone. Exceeding this number will cause a lot of routing flooding and slow convergence. Starlink has tens of thousands of satellites, so there will be problems with using OSPF here.

My interpretation of Starlink’s routing plan is that each satellite has exactly two lasers, one always trained on the next satellite leading in the same orbit, the other at the trailing satellite; and two telescopes, likewise.

Thus, each arriving packet comes in by one route and leaves by one of exactly two routes. For most packets, the choice is easy: if there is a ground station in range, the packet goes down. If not, it goes to another satellite in the direction to the nearest ground station. Normally a packet makes no more than one or two laser hops.

For a select few packets identified with *very high-paying* subscribers (based primarily in New York, London, Tokyo, Hong Kong), it goes to the next satellite in the direction of the ultimate destination unless that is immediately below. Those select subscribers are paying to get a few milliseconds’ jump on traffic dawdling in fiber on the ocean floor, to arrive only after the smart money has already acted on intelligence arriving via Starlink.

Starlink could collect extra revenue by lofting their satellites with a few tens of TB of storage, and storing onboard the latest popular Disney, Netflix, and Prime episodes, or any other heavily edge-replicated data, for proxied “last-megameter” delivery.

Two misconceptions. Dedicated fiber will always be faster than satellites. Fiber was installed in the ground to service an expected customer base, for example the financial centers between London and New York.

Proxy caching is extremely unlikely. Caching is a function of servers which are very complicated and expensive. Routers and switches are cheaper. I doubt that a satellite can be augmented without adding lots of mass and cost.

Proxy caching is usually done to deliver latency under 10 ms, and usually a lot less. Content providers are going to look for density before they even consider it. Some will not even bother unless they know there is 100G to cache at peak.

>> Dedicated fiber will always be faster then satellites.

The speed of light in optic fibre is typically 30% slower than in a vacuum. The path taken through the constellation’s satellites is slightly longer than that of a dedicated cable, but will still be quicker.
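A rough, idealized comparison, with every number an assumption: a great-circle distance of about 5,570 km between London and New York, fibre laid exactly along that arc at 70% of the vacuum speed of light, and a satellite path that goes straight up 550 km, follows the same arc at altitude, and comes straight down. Real cables and real satellite hops are both less direct than this.

```python
C_KM_S = 299_792.458        # speed of light in vacuum, km/s
EARTH_RADIUS_KM = 6371.0    # assumed mean radius
GROUND_DISTANCE_KM = 5570.0 # assumed London to New York great-circle distance
ALTITUDE_KM = 550.0         # assumed shell altitude
FIBRE_SPEED_FRACTION = 0.7  # "30% slower than in a vacuum"

# One-way latency through idealized fibre along the great circle.
fibre_ms = GROUND_DISTANCE_KM / (FIBRE_SPEED_FRACTION * C_KM_S) * 1000.0

# One-way latency via satellites: up, along the arc at altitude, down.
arc_at_altitude_km = GROUND_DISTANCE_KM * (EARTH_RADIUS_KM + ALTITUDE_KM) / EARTH_RADIUS_KM
sat_path_km = 2 * ALTITUDE_KM + arc_at_altitude_km
sat_ms = sat_path_km / C_KM_S * 1000.0

print(f"fibre:     {fibre_ms:.1f} ms")
print(f"satellite: {sat_ms:.1f} ms")
```

Under these assumptions the satellite path is well over a thousand kilometers longer but still arrives a few milliseconds sooner; processing delay at each hop is ignored.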

What did I tell you?

https://www.teslaoracle.com/2021/01/25/spacx-record-143-satellites-laser-starlinks/

“Musk has confirmed on Twitter that the black pipe-type objects at the end of each Starlink satellite are actually laser links” — count ’em, two.

And of course it is now impossible to add any extra lasers at any point in the future since the photo is already out and your conclusion is already drawn.

“When my information changes, I change my mind. What do you do?”

This new 2022 breakthrough algorithm is a potential gamechanger for Starlink packet routing

https://www.quantamagazine.org/researchers-achieve-absurdly-fast-algorithm-for-network-flow-20220608/

Very cool, at least as long as Starlink continues to solve the problem globally.

Let’s hope it can be implemented and pushed via a software update to the satellites. Otherwise, if it requires new hardware, we’ll have to wait for Starlink v3.0 generation of satellites, since v2.0 hardware is probably already finalised.

That’s assuming the algorithm doesn’t get patented.

Algorithm patents are very frustrating and hold back entire industries.