Part of my series countering misconceptions in space journalism. Now available in audio form.

Starlink, SpaceX’s plan to serve internet via tens of thousands of satellites, is a staple in the space press, with articles appearing every week on the latest developments. The broad schema is clear and, thanks to filings with the FCC, a sufficiently well motivated individual (such as your humble servant) can deduce a great deal of detail. Despite this, there is still an unusually high degree of confusion around this new technology, even among expert commentators. It is not uncommon to read articles comparing Starlink to OneWeb and Kuiper (among others), as though they were all equal competitors. It is not uncommon to read of well-meaning concerns regarding space junk, space law, regulation, and harm to astronomy. It is my hope that by the end of this rather lengthy post, the reader will be both better informed and more excited by Starlink.

My previous post on Starship struck an unexpected chord with my ordinarily sparse readership. In it, I explained how Starship would greatly lengthen SpaceX’s lead over competing launch providers and, at the same time, provide a mechanism for the redevelopment of space. The subtext here is that the conventional satellite industry was unable to keep up with SpaceX’s steadily increasing capacity and decreasing costs on the Falcon family of launchers, leaving SpaceX in a difficult position. On the one hand, it was saturating a market worth, at most, a few billion a year. And on the other, it was developing an insatiable appetite for cash to build an enormous rocket with almost no paying customers, and then fly thousands of them to Mars for no immediate economic return.

The answer to these twin problems is Starlink. By developing their own satellites, SpaceX could create and define a new market for highly capable, democratized space communication access, provide a revenue stream and payloads for their own rocket even as they self-cannibalized, and eventually unlock trillions in economic value. Do not underestimate the scale of Elon’s ambition. There are only a few trillion dollar industries in existence: energy, high speed transport, communications, chemicals, IT, healthcare, agriculture, government, defense. Despite common misconceptions, space mining, lunar water, and space-based solar power are not viable businesses. Elon has a play in energy with Tesla, but only communications provides a reliable, deep market for satellites and launch.

Elon Musk’s first space-related idea was to spend $80m on a philanthropic mission to grow a plant on a Mars lander. Building a Mars city will cost maybe 100,000 times as much. Starlink is Elon’s main bet to deliver the ocean of gold needed to philanthropically build a self-sustaining city on Mars.

Why?

I have been planning some version of this post for a very long time but until last week, I didn’t quite have all the pieces in place. Then SpaceX President Gwynne Shotwell gave an incredible interview with Rob Baron, covered by Michael Sheetz for CNBC in a glorious Twitter thread and a couple of articles. This interview cast into sharp relief the difference between SpaceX’s approach to communications satellites and everyone else’s.

Starlink was born conceptually in 2012 when SpaceX realized that its customers, primarily comsat providers, had better margins than they did. Launch providers charge famously unreasonable rates to place satellites in orbit, and yet somehow there was a piece of the action that they had missed? Elon dreamed of an internet constellation and, unable to resist a near-impossible technical challenge, got the ball rolling. The Starlink development process has had its difficulties but by the end of this post you, the reader, will probably be surprised just how few difficulties there were, given the magnitude of the underlying vision.

Why do we need an enormous constellation of satellites to provide internet? Why now?

In just my lifetime the internet has grown from an academic curiosity to the single most transformational piece of infrastructure ever built. This isn’t the topic for an extended discussion of the internet, but I will assume that global demand for internet and the wealth it brings will continue to rapidly grow by about 25% a year.

But today, almost all of us get our internet from a tiny handful of geographically-isolated monopolies. In the US, AT&T, Time Warner, Comcast, and a handful of smaller players have divided up the country to avoid competition, charge exorbitant rates for bad service, and bask in near universal hatred.

There is a compelling reason, besides overwhelming greed, for anti-competitive behavior among internet service providers. The underlying infrastructure of the internet, microwave cell towers and optical fiber, is extremely expensive to build. It’s easy to forget just how miraculous the data-transfer properties of the internet are. My grandmother’s first job was as a Morse code operator during the Second World War – a medium that competed with homing pigeons for preeminent strategic value! For most of us, riding the information superhighway is so disembodied, so incorporeal, that we forget that those bits have to traverse our physical world with all its borders, rivers, mountains, oceans, storms, natural disasters, and other annoyances. Neal Stephenson wrote the definitive essay on cybertourism when the first internet-dedicated oceanic optical fiber cable was laid, all the way back in 1996. His characteristic sharp prose ably describes the sheer cost and difficulty of building these wretched lolcat pipes. For much of the 2000s, so much cable was being laid that the rate of deployment, combined across multiple ships, was supersonic.

At the time I worked in an optics lab and (IIRC) we demonstrated a then record-breaking multiplexing rate of 500Gb/s. Limitations in electronics meant that each fiber was carrying something like 0.1% of its theoretical maximum capacity. 15 years later, we’re approaching those limits. Beyond a certain point, transmitting more data down a given fiber will melt it, and that time is not too far off.

What we need is a way to lift the data flow off Earth’s tumultuous surface and into space, where a satellite can effortlessly circle the Earth 30,000 times in 5 years. This seems obvious – so why hasn’t someone tried it before?

The Iridium constellation, invented and deployed by Motorola (remember them?) in the early 1990s was the first global LEO-based communications network, as told engagingly in this book. By the time it was deployed, its niche capability to route small data packets from asset trackers turned out to be its main application, as cellular telephones became cheap enough that satellite phone usage never took off. The Iridium network had 66 satellites (plus a few spares) in 6 distinct orbital planes – the minimum number necessary to completely cover the globe.

If 66 satellites is enough for Iridium, why is SpaceX planning tens of thousands? What’s different about SpaceX?

SpaceX came to this business from the other direction, from launch. As a result of their pioneering efforts in booster recovery, they have completely cornered the market in cheap launch. There’s not much money to be made in excessively undercutting their competition, so the only way to exploit their excess capacity is to become their own customer. Because SpaceX can launch their satellites for about a tenth of the price (per kg) of the original Iridium constellation, they’re able to address a substantially more inclusive market.

Starlink’s world-spanning internet will bring high quality internet access to every corner of the globe. For the first time, internet availability will depend not on how close a particular country or city comes to a strategic fiber route, but on whether it can view the sky. Entrepreneurs the world over will have unfettered access to the global internet irrespective of their own variously incompetent and/or corrupt government telco monopolies. Starlink’s monopoly-breaking capacity will catalyze enormous positive change, bringing, for the first time, billions of humans into our future global cybernetic collective.

If I may digress for a paragraph, what does that even mean?

For people growing up today in an era of ubiquitous connectivity, the internet is like the air we breathe. It’s just always there. But that is to forget its enormous power for bringing about positive change – a change we’re currently in the middle of. The internet is able to help people hold leaders to account, communicate with people in other places, share ideas, invent new things, and unify the human race. The history of modernity is one of increased capacity for human data sharing. First, through speeches and epic poetry. Then writing, which enables the dead to speak to the living, data storage, and asynchronous communication. The printing press, which enabled the mass production of information. Electronic communication, which sped the passage of data across the world. Our personal note keeping devices became steadily more sophisticated from notebooks to cell phones, each of which is an internet-connected computer festooned with sensors and increasingly capable of intelligently predicting our needs.

A human capable of using writing and computers as a cognitive aid is significantly more capable of transcending the limitations of their imperfectly evolved wetware. What’s more exciting is that in cell phones humans have both a powerful note keeping device and also a mechanism for sharing thoughts with other humans. Whereas previously humans may have relied on speech to share the ideas they’d jotted in their notebooks, the norm today is for the notebooks to share the ideas that humans have generated – an inversion of the traditional schema. The logical extension of this process is a form of collective metacognition, mediated by personal devices ever-more-closely integrated with our brains and each other. While there is space in this world to be nostalgic for the diminution of our connection to nature and the loss of solitude, it is important to remember that technology, and technology alone is responsible for the vast majority of humanity’s emancipation from the “natural” cycles of ignorance, premature avoidable death, violence, malnutrition, and tooth decay.

How?

Let’s talk about the Starlink business case and design architecture.

For Starlink to be a good business, its revenue needs to exceed its cost of construction and operation. Traditionally, satellite businesses have front-loaded costs in capex, exploiting sophisticated specialized financing and insurance mechanisms to launch a satellite. A geostationary communications satellite might cost $500m and take 5 years to build and launch, so the industry operates similarly to jet or container ship construction. Enormous outlays and just enough revenue to cover financing costs, with a comparatively cheap operating budget. In contrast, the primary downfall of the original Iridium constellation was that Motorola forced the operator to pay ruinous licensing fees, bankrupting the whole endeavor within months.

To run these businesses, traditional satellite companies have had to serve specialty customers and charge high rates for their data. Airlines, remote outposts, ships, war zones and critical infrastructure pay around $5/MB, which is 5000 times higher than the cost for a traditional ADSL connection, despite the poor latency and relatively low bandwidth of a satellite link.

Starlink intends to compete with commercial terrestrial ISPs, so has to be able to deliver data for less, ideally much less, than $1/GB. Is this possible? Or rather, since it is possible, we may ask: How is this possible?

The first ingredient in the mix is cheap launch. Falcon currently sells launches for about $60m for up to 24 T, which works out to be $2500/kg. This is a lot more than their internal marginal cost, however. Starlink satellites are to be launched on multiply reflown boosters, so the marginal cost per launch is the cost of a new second stage (perhaps $4m), fairings ($1m) and ground handling (~$1m). This works out to be around $100k per satellite, more than 1000 times cheaper than a conventional comsat launch.
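The launch arithmetic above can be sketched in a few lines. This is a rough illustration using only the estimates quoted in this post; the batch size of 60 satellites per Falcon launch is an assumption borrowed from the "planes of 60" discussed below, and none of the figures are published SpaceX numbers.

```python
# All dollar figures are this post's estimates, not official SpaceX numbers.
LIST_PRICE_USD = 60e6        # quoted Falcon launch price
MAX_PAYLOAD_KG = 24_000      # "up to 24 T"
SECOND_STAGE_USD = 4e6       # new second stage (estimate)
FAIRINGS_USD = 1e6
GROUND_HANDLING_USD = 1e6
SATS_PER_LAUNCH = 60         # assumption: one plane of 60 per launch


def list_price_per_kg() -> float:
    """Customer-facing price per kilogram at full payload."""
    return LIST_PRICE_USD / MAX_PAYLOAD_KG


def marginal_cost_per_launch() -> float:
    """Internal cost when the booster is reflown: only expended parts count."""
    return SECOND_STAGE_USD + FAIRINGS_USD + GROUND_HANDLING_USD


def marginal_cost_per_satellite() -> float:
    return marginal_cost_per_launch() / SATS_PER_LAUNCH


print(f"List price:        ${list_price_per_kg():,.0f}/kg")
print(f"Marginal launch:   ${marginal_cost_per_launch():,.0f}")
print(f"Per satellite:     ${marginal_cost_per_satellite():,.0f}")
```

The point of separating list price from marginal cost is that only the latter matters when SpaceX is its own customer.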

Most of Starlink will be launched on Starship, however. Indeed, the evolution of the Starlink constellation as evidenced by updated FCC filings gives us some insight into how the internal architecture evolved as Starship became real. The total number of satellites in the constellation increased from 1584 to 2825 to 7518 to 30,000. Or, if you believe the total accumulates, even more. The minimum viable number of satellites for the first phase of development is 6 planes of 60 (360) with 24 planes of 60 (1440) required for total coverage within 53 degrees of the equator. 24 launches on Falcon may cost as little as $150m internally. In contrast, Starship is designed to launch up to 400 satellites at a time, and for a similar cost per launch. Starlink satellites are intended to be replaced after five years, so a 30,000 satellite constellation needs 6000 new satellites a year, or 15 Starship launches. This could cost as little as $100m/year, or roughly $15k per satellite. While each of the Falcon-launched satellites weighs 227 kg, the Starship-launched satellites could weigh as much as 350 kg while carrying third party instruments, and be somewhat larger without exceeding the capacity of the launcher.
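A quick sketch of the replacement cadence, assuming the 6000 satellites a year corresponds to the full 30,000 satellite constellation being refreshed every five years (my reading of the numbers, labeled as an assumption in the code). The $100m annual launch budget is the estimate from the text.

```python
import math

CONSTELLATION_SIZE = 30_000        # assumption: full constellation from the latest filing
LIFETIME_YEARS = 5                 # intended satellite lifetime
SATS_PER_STARSHIP = 400            # "up to 400 satellites at a time"
ANNUAL_LAUNCH_BUDGET_USD = 100e6   # the post's estimate

# Steady state: every satellite is replaced once per lifetime.
replacements_per_year = CONSTELLATION_SIZE / LIFETIME_YEARS
launches_per_year = math.ceil(replacements_per_year / SATS_PER_STARSHIP)
production_per_day = replacements_per_year / 365
launch_cost_per_sat = ANNUAL_LAUNCH_BUDGET_USD / replacements_per_year

print(f"Replacements per year: {replacements_per_year:,.0f}")
print(f"Starship launches/yr:  {launches_per_year}")
print(f"Production per day:    {production_per_day:.1f}")
print(f"Launch cost per sat:   ${launch_cost_per_sat:,.0f}")
```

The per-satellite figure comes out slightly above the rounded $15k used in the text, which is well within the noise of these estimates.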

What do the satellites cost? As satellites go, the Starlink sats are somewhat unusual. Built, stacked and launched in a flat configuration (stackellites?), they are exceptionally easy to mass produce. As a rule of thumb, the manufacturing cost target should be about the same as the unit launch cost. A big cost disparity would be a sign of misallocated engineering resources, since further savings on the cheaper of the two barely improve the all-up marginal cost. Does $100k per satellite for the first few hundred sound reasonable? In other words, is a Starlink satellite of similar complexity to a car?

To answer this question completely, we need to understand why a geostationary comsat could cost a thousand times as much, despite not being a thousand times as complicated. More generally, why does space hardware cost so damn much? There are many reasons for this, but the most salient in this case is that if the geostationary launch (pre Falcon) costs more than $100m, the satellite needs to reliably operate for many many years to make any money. Ensuring a high probability of operability on the first and only article is a painful, drawn out process that takes years and involves hundreds of people. Costs add up and it’s easy to justify additional processes if the launch is that expensive anyway.

Starlink, on the other hand, breaks this paradigm by building hundreds of satellites, iterating quickly on early design flaws, and employing the techniques of mass production to control costs. I personally have no difficulty imagining a Starlink production line where a tech can integrate and zip tie (NASA-grade, of course) everything together in an hour or two, maintaining the necessary replacement rate of 16 satellites per day. A Starlink satellite contains many fancy parts but I see no reason why total production costs couldn’t fall to $20k by the thousandth unit off the line. Indeed, in May Elon tweeted that satellite production cost was already less than launch cost.

Let’s pick an intermediate case to analyse “time to revenue”, using round numbers. A singular Starlink satellite costing $100k to build and launch is operated for five years. Will it pay for itself, and how quickly?

In five years, a Starlink satellite will perform 30,000 orbits. In each of these 90 minute orbits, the satellite will spend most of its time over the uninhabited ocean, and perhaps only 100 seconds over a densely populated city. During that brief window, it can transmit data and earn revenue as fast as it can. Assuming the antenna can support 100 separate beams, and each beam can transmit at 100MB per second using advanced coding such as 4096QAM, the satellite generates $1000 of revenue per orbit, assuming a subscriber cost of $1/GB. This is sufficient to earn the $100k deployment cost in only a week, greatly simplifying the capital structure. The remaining 29,900 orbits are profit, once fixed costs are accounted for.

Obviously these assumptions can vary a lot, in either direction. But in any case, being able to deliver a competent communications constellation to LEO for $100k, or even $1m, per unit offers a substantial business opportunity. Even taking into account its ludicrously low usage fraction, a Starlink satellite can deliver 30 PB of data over its lifetime at an amortized cost of $0.003/GB, with practically no marginal cost increase for transmission over a longer distance.
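To make it easy to vary those assumptions, here is the time-to-revenue calculation as a small script. Every constant is one of the round numbers used above; decimal units (1 GB = 1000 MB) are my choice for simplicity.

```python
BEAMS = 100
MB_PER_S_PER_BEAM = 100
SECONDS_OVER_CITY = 100        # useful transmit time per orbit
PRICE_USD_PER_GB = 1.0
ORBIT_MINUTES = 90
ORBITS_PER_LIFETIME = 30_000   # five years of 90 minute orbits


def gb_per_orbit() -> float:
    """Data sold per orbit, in decimal gigabytes."""
    return BEAMS * MB_PER_S_PER_BEAM * SECONDS_OVER_CITY / 1000


def payback_days(unit_cost_usd: float) -> float:
    """Days of operation needed to earn back build plus launch cost."""
    orbits = unit_cost_usd / (gb_per_orbit() * PRICE_USD_PER_GB)
    return orbits * ORBIT_MINUTES / (60 * 24)


def lifetime_data_pb() -> float:
    return gb_per_orbit() * ORBITS_PER_LIFETIME / 1e6


def amortized_cost_per_gb(unit_cost_usd: float) -> float:
    return unit_cost_usd / (gb_per_orbit() * ORBITS_PER_LIFETIME)


for cost in (100e3, 1e6):
    print(f"${cost:,.0f} satellite: payback in {payback_days(cost):.1f} days, "
          f"${amortized_cost_per_gb(cost):.4f}/GB amortized")
print(f"Lifetime data: {lifetime_data_pb():.0f} PB")
```

Even the pessimistic $1m unit cost pays back in about two months under these assumptions.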

To understand how compelling this model is, let’s briefly compare it to two other models for consumer data delivery: conventional optical fiber-based, and a satellite constellation offered by a company that doesn’t specialize in launch.

SEA-ME-WE 4 is a major submarine cable running from France to Singapore, commissioned in 2005. It is capable of transmitting 1.28Tb/s, and cost about $500m to deploy. If it operates at the equivalent of 100% capacity for 10 years, with a 100% overhead for capital costs, then the price of data works out to be $0.02/GB. Transatlantic cables are shorter and a bit cheaper, but the undersea cable is just one entity in a long line of people who need money to deliver data. The middle of the road estimate for Starlink is roughly 7 times cheaper, all in, than just the undersea cable.
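The cable figure can be checked with the same kind of back-of-envelope script. The inputs are the ones quoted above; the 365.25 day year and decimal gigabytes are my conventions.

```python
CAPACITY_TBPS = 1.28        # terabits per second
DEPLOY_COST_USD = 500e6
CAPITAL_OVERHEAD = 1.0      # 100% overhead for capital costs
YEARS_AT_FULL_CAPACITY = 10

SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Convert terabits/s to gigabytes/s: x1000 for Tb -> Gb, /8 for bits -> bytes.
gb_per_second = CAPACITY_TBPS * 1000 / 8
total_gb = gb_per_second * SECONDS_PER_YEAR * YEARS_AT_FULL_CAPACITY
total_cost_usd = DEPLOY_COST_USD * (1 + CAPITAL_OVERHEAD)
cable_cost_per_gb = total_cost_usd / total_gb

print(f"Throughput:  {gb_per_second:.0f} GB/s")
print(f"Total data:  {total_gb:.3e} GB over {YEARS_AT_FULL_CAPACITY} years")
print(f"Cable cost:  ${cable_cost_per_gb:.4f}/GB")
```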

How is that possible? Each Starlink satellite includes all the complicated electronic switching gear found linking optical fibers together, but instead of an expensive and vulnerable cable to carry data, they simply use empty vacuum. Transmitting data through space dis-intermediates all the comfortable moribund monopolies and allows consumers to connect to each other with even less hardware in between.

Let’s contrast that to a competing constellation developer, OneWeb. OneWeb plans a constellation of around 600 satellites to be launched with a range of commercial providers for around $20,000/kg. Each satellite will weigh 150 kg, implying a best case unit launch cost of around $3m. Satellite hardware costs are quoted to be $1m each, for a total constellation development cost of $2.6b by 2027. The OneWeb test satellites have demonstrated a peak data rate of 50 MB/s, ideally on each of their 16 beams. Following the same procedure as the Starlink calculation above, we find that each OneWeb satellite generates $80/orbit revenue and a total of $2.4m in 5 years, perhaps just covering launch cost when data services to remote areas are included. This works out to be about $1.70/GB.
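Here is the OneWeb version of the calculation, as a sketch. It reuses the Starlink assumptions from earlier (100 seconds of useful transmission per orbit, 30,000 orbits in five years, $1/GB) together with the OneWeb figures quoted above; none of this comes from OneWeb itself.

```python
LAUNCH_USD_PER_KG = 20_000
SAT_MASS_KG = 150
HARDWARE_USD = 1e6
BEAMS = 16
MB_PER_S_PER_BEAM = 50
SECONDS_OVER_CITY = 100        # same assumption as the Starlink case
ORBITS_PER_LIFETIME = 30_000   # five years
PRICE_USD_PER_GB = 1.0

launch_cost = LAUNCH_USD_PER_KG * SAT_MASS_KG
unit_cost = launch_cost + HARDWARE_USD
gb_per_orbit = BEAMS * MB_PER_S_PER_BEAM * SECONDS_OVER_CITY / 1000
revenue_per_orbit = gb_per_orbit * PRICE_USD_PER_GB
lifetime_revenue = revenue_per_orbit * ORBITS_PER_LIFETIME
cost_per_gb = unit_cost / (gb_per_orbit * ORBITS_PER_LIFETIME)

print(f"Launch cost per sat: ${launch_cost:,.0f}")
print(f"Revenue per orbit:   ${revenue_per_orbit:,.0f}")
print(f"Lifetime revenue:    ${lifetime_revenue:,.0f}")
print(f"Delivered cost:      ${cost_per_gb:.2f}/GB")
```

Note that lifetime revenue at $1/GB falls short of the $4m unit cost, which is why the delivered cost per GB ends up above a dollar.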

Gwynne Shotwell was recently quoted as saying that Starlink is 17x cheaper or faster than OneWeb, which would imply a comparative cost of $0.10/GB. This refers to the cost of delivering data in Starlink’s initial configuration, with less optimized production, Falcon launch, and limited data offerings only in the northern USA. It turns out that SpaceX has an insurmountable advantage over the competition: they can launch a more capable satellite, today, for 15 times less per unit. Starship will increase this to a factor of 100 or more; indeed, it is possible to imagine SpaceX launching 30,000 satellites by 2027 for a total cost of less than $1b, most of which will be self-funded by that point.

I’m sure there’s an analysis that is less bleak for OneWeb and other hopeful constellation developers, but I’m not quite sure how it would be structured.

Morgan Stanley recently estimated that Starlink satellites would each cost $1m to build and $830k to launch, a figure that Gwynne Shotwell described as “waaaaayyyy off”. I find it interesting that the estimated costs are similar to our guess for OneWeb, but roughly 10 times the estimate for the initial version of Starlink. Use of Starship and satellite mass production could lower satellite deployment costs to around $35k per unit, an astonishingly cheap figure.

One final point is to compare the revenue per Watt of solar power generated for Starlink. Each satellite’s solar array is about 60 sqm according to photographs on the website, which means that they generate an average of around 3kW, or 4.5kWh, over an entire orbit. With a ballpark estimate of $1000 of revenue per orbit, each satellite is generating about $220/kWh. This is 10,000 times the wholesale cost of solar-generated electricity, once again demonstrating that space-based solar power is a losing proposition. Modulating microwaves with data is an enormous value-add!
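As a sanity check on the revenue per unit of energy, the sketch below uses the 3kW average power and $1000 per orbit from the text. The wholesale electricity price is not an input here: it is simply what the factor of 10,000 implies.

```python
AVERAGE_POWER_KW = 3.0
ORBIT_HOURS = 1.5                 # one 90 minute orbit
REVENUE_PER_ORBIT_USD = 1000.0    # ballpark from the time-to-revenue estimate
CLAIMED_MULTIPLE = 10_000         # the post's ratio to wholesale solar

energy_per_orbit_kwh = AVERAGE_POWER_KW * ORBIT_HOURS
revenue_per_kwh = REVENUE_PER_ORBIT_USD / energy_per_orbit_kwh
implied_wholesale_per_kwh = revenue_per_kwh / CLAIMED_MULTIPLE

print(f"Energy per orbit:   {energy_per_orbit_kwh:.1f} kWh")
print(f"Revenue per kWh:    ${revenue_per_kwh:.0f}")
print(f"Implied wholesale:  ${implied_wholesale_per_kwh:.3f}/kWh")
```

The implied wholesale price of a couple of cents per kWh is in the right range for utility-scale solar, so the factor of 10,000 holds up as an order of magnitude.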

Architecture

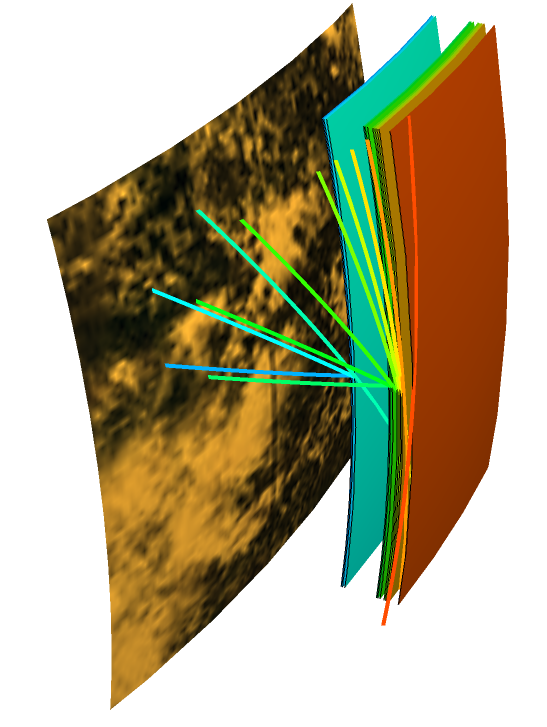

In the previous section, I glossed over a substantially non-trivial part of the Starlink architecture; the manner in which it deals with humans’ decidedly non-uniform population density. Each Starlink satellite can produce focused beams which produce spots on the surface of the Earth. All subscribers within one beam spot have to share bandwidth. The size of this spot is determined by fundamental physics – essentially its width is (satellite altitude x microwave wavelength/antenna diameter), which for Starlink satellites works out to be, at best, a few kilometers.
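The spot size relation is simple enough to play with directly. The example inputs below (a 1 cm Ka band wavelength, a 1 m antenna, and altitudes of 330 km and 400 km) are taken from later sections of this post and are illustrative rather than measured values; the function name is my own.

```python
def spot_width_km(altitude_km: float, wavelength_m: float,
                  antenna_diameter_m: float) -> float:
    """Diffraction-limited spot width: altitude x wavelength / antenna diameter.

    The wavelength/diameter ratio is the beam's angular width in radians,
    so multiplying by altitude gives the width on the ground directly below.
    """
    return altitude_km * wavelength_m / antenna_diameter_m


KA_WAVELENGTH_M = 0.01   # roughly 1 cm, illustrative
ANTENNA_M = 1.0          # illustrative phased array width

for altitude in (330, 400):
    width = spot_width_km(altitude, KA_WAVELENGTH_M, ANTENNA_M)
    print(f"{altitude} km altitude -> spot about {width:.1f} km wide")
```

This only covers a beam pointed straight down. Beams steered toward the horizon travel further and strike the ground obliquely, so their footprints are larger.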

Most cities have a population density of around 1000 people/sqkm, though some are much higher. In parts of Tokyo or Manhattan, there could be more than 100,000 people within a single beam’s footprint. Fortunately, in all such high density cities there is a competitive broadband wholesale market, not to mention highly developed mobile phone networks. Further, in the case where a highly developed constellation has multiple satellites overhead at any one time, the data rate can be increased by spatial separation as well as frequency allocation. In other words, dozens of satellites could point their most powerful beam at the same area, and subscribers in that area would use ground terminals that would split the demand between those satellites.

While remote, rural, and suburban areas will provide the most relevant market for the initial stages of the constellation, further launches will be funded entirely by providing better service to high density cities. This is the opposite to the more typical market expansion scenario, where a competitive city-based service inevitably suffers declining margins as it attempts to grow outwards into poorer, more sparse regional areas.

When I first ran these numbers years ago, this was the best population density map I could find.

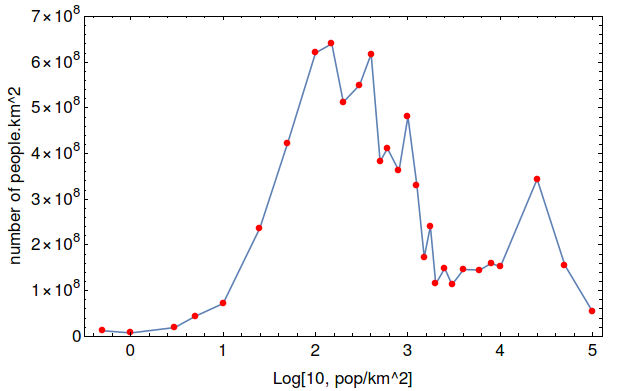

I scraped the data out of this image to produce the following three graphs, each a moment of the underlying data. The first shows frequency of Earth area by population density. The most salient point is that most of Earth’s area has no humans at all, while almost none has more than 100 people/sqkm.

The second shows the frequency of humans by population density. This shows that although most of the Earth is unpopulated, most humans live in areas with between 100 and 1000 people/sqkm. The extended nature of this peak (over an order of magnitude) reflects an underlying bimodality in urbanization patterns. 100 people/sqkm represents a relatively sparse rural environment, while 1000 people/sqkm is more typical of suburbia. Urban centers can easily support 10,000 people/sqkm, while Manhattan’s population density is 25,000 people/sqkm.

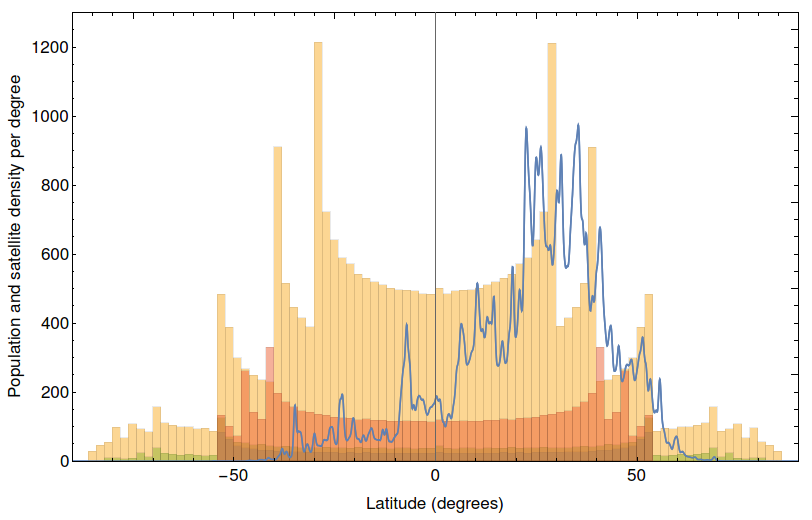

The third graph shows population density by latitude. Here we see almost all humans clustered between 20 and 40 degrees North. This is largely an accident of history and geography, since so much of the global South is ocean. Still, such a population density presents a daunting challenge for constellation architects, as satellites spend equal time in each hemisphere. Further, a satellite orbit at an inclination of, say, 50 degrees will spend more time towards the extremes of that latitude than the intermediate areas. This is why Starlink can serve customers in the northern US with only 6 planes filled, while 24 orbital planes are needed to fully cover the Earth’s equator.

Indeed, if you overlay the population density graph with a satellite density graph, the choices of orbits become very clear. Each of the histograms represents one of SpaceX’s four filings with the FCC. I personally believe that in practice each filing supersedes the previous one, but in any case it is easy to see how additional satellites add data capacity over the needed regions in the northern hemisphere. Conversely, substantial underutilized capacity exists in the southern hemisphere, which is great news for my native Australia.

Once the data has made it from the subscriber to the satellite, what happens to it? In the initial version of Starlink, satellites relay this data immediately back to dedicated ground stations near the areas of service. This configuration is called “bent pipe”. In future, Starlink satellites will add the capability to communicate between each other using lasers. Satellites over dense cities will be at peak capacity, but data can diffuse through the laser network over two dimensions. In practice this means that there is enormous latent backhaul capacity in the satellite network, so subscriber data can be “regrounded” anywhere that suits. I anticipate that SpaceX ground stations will be collocated with carrier hotels outside of urban areas.

It turns out that satellite to satellite communication is non-trivial in cases where the satellites are not comoving. The most recent FCC filing gives 11 distinct orbit families of satellites. Within a given family, surrounding satellites are moving at the same altitude, inclination, and eccentricity, meaning that the laser links can track the immediately surrounding satellites relatively easily. But between families the closing speeds are measured in km/s, so inter-family communication, if any, must be conducted with short-lived, rapidly steered microwave links.

The topology of these orbit families is super esoteric and not hugely relevant to the business case, but I find them beautiful so I’m going to include them. If you’re not that excited by this section, skip to “Fundamental physical limits” below.

A torus, or donut, is a mathematical object defined by two radii. It is fairly trivial to draw circles on the surface of a torus, either parallel or perpendicular to the shape. It may interest you to discover that there are two other families of circles that can be drawn on the surface of a torus, both passing through the central hole and around the exterior of the torus. This construction is called the Villarceaux circles, and I used it when I designed the toroid for the 2015 Burning Man tesla coil Coup de Foudre.

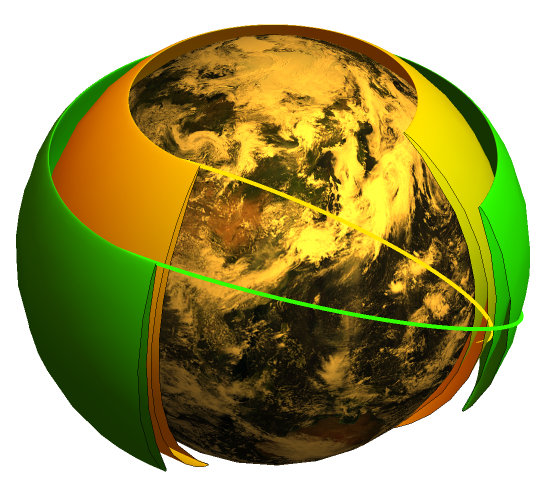

Although orbits are in general ellipses, not circles, a similar construction applies in the case of Starlink. A set of 4500 satellites in a series of orbital planes, all at common inclination, form a continuously moving sheet over the surface of the Earth. The northward moving sheet turns around at a given latitude and moves south once more. To avoid collisions, the orbits will be very slightly eccentric, such that the northward moving sheet will be a few kilometers higher (or lower) in altitude than the southward moving sheet. Together, the two sheets form a blown out torus shape, as shown in this exaggerated diagram.

Recall that, within this torus, communication is between adjacent comoving satellites. In general, there are no long-lived communication links directly between satellites in the northward moving and southward moving sheets, as their closing speeds are too high to accurately track with a laser. The path for data transmission between sheets, then, passes around the top or bottom of the torus. More detail on satellite links.

In the aggregate, 30,000 satellites will be positioned in 11 nested tori with a wide gap around the orbit of the International Space Station! This diagram shows how all these sheets stack up, without exaggerated eccentricity.

Finally, it is worth considering optimal flight altitude. There is a trade off between low altitude, which allows smaller beam sizes and higher data rates, and high altitude, which allows fewer satellites to cover the whole Earth. Over time, SpaceX’s FCC filings have trended towards lower altitudes, especially as Starship’s development makes the rapid deployment of larger constellations possible.

There are other advantages to low altitude, including greatly reduced risk of collision with debris or negative consequences of hardware failure. Due to increased atmospheric drag, the lowest flying Starlink satellites (330 km) will burn up in a matter of weeks after loss of orientation control. Indeed, 330 km is lower than nearly all other satellites, and maintaining altitude will require use of the built-in Krypton electric thruster, as well as a streamlined design. In principle, a sufficiently pointy electrically propelled satellite could orbit stably as low as 160 km, but I don’t expect SpaceX to fly that low when there are a few other data rate-increasing tricks to try.

Fundamental physical limits

While it seems unlikely that satellite deployment costs will ever drop much below $35k, even with advanced automated manufacturing and fully reusable Starships, the ultimate physical limits on satellite performance are less clear. The analysis above assumed a peak data capacity of around 80Gbps, based on the round numbers of 100 beams each capable of delivering 100MB/s.

The ultimate limit on channel capacity is set by the Shannon-Hartley theorem, and is given by bandwidth times log2(1+SNR), with SNR expressed as a linear power ratio. Bandwidth is often limited by available spectrum, while SNR is restricted by available power on the satellite, background noise, and channel cross talk due to imperfect antennas. Another salient limit is processing rate. The latest Xilinx Ultrascale+ FPGAs have GTM serial capability up to 58Gbps, which is a good estimate for current limits on channel data capacity without developing custom ASICs. Even then, 58Gbps would require a substantial frequency allocation, most likely in the Ka or V bands. The V band (40-75GHz) has more available cycles, but suffers more atmospheric absorption especially in areas with high humidity.
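To get a feel for how much spectrum 58Gbps actually needs, here is a minimal sketch of the Shannon-Hartley relation. The SNR values are illustrative assumptions, not Starlink link budget figures. The 36 dB case corresponds to the theoretical minimum SNR for 12 bits/s/Hz, the density carried by the 4096QAM coding mentioned earlier; a real modem would need additional margin.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert an SNR in decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley limit: C = B * log2(1 + SNR)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def required_bandwidth_hz(capacity_bps: float, snr_linear: float) -> float:
    """Invert Shannon-Hartley for the minimum bandwidth at a given SNR."""
    return capacity_bps / math.log2(1 + snr_linear)


TARGET_BPS = 58e9  # FPGA serial limit quoted in the text

for snr_db in (10, 20, 36.12):  # illustrative SNR values (assumptions)
    bw = required_bandwidth_hz(TARGET_BPS, db_to_linear(snr_db))
    print(f"SNR {snr_db:5.2f} dB -> at least {bw / 1e9:.1f} GHz of spectrum")
```

Even at a very optimistic SNR the answer is several GHz per channel, which is why the Ka and V bands are the natural candidates.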

Are 100 beams practical? There are two aspects to this problem: beam width and phased array antenna element density. Beam width is determined by the wavelength divided by the antenna diameter. A digital phased array antenna is still specialized technology, but maximum practical sizes are determined by the width of a reflow oven (around 1 m) since using RF interconnects is asking for trouble. The Ka band wavelengths are around a centimeter, implying a beam width of 0.01 rads at full width half maximum. Assuming a useful beam solid angle of 1 steradian (similar to the field of view of a 50mm camera lens), around 2500 distinct beams can be fit in this area. Linearity implies that 2500 beams would require at least 2500 antenna elements within the array, which is possible though not easy. It may also get rather warm when used!

All up, 2500 channels each supporting 58 Gbps is a staggering quantity of data, roughly 145 Tbps. For comparison, total internet traffic in 2020 is predicted to average 640 Tbps. This is good news for people worried about inherently low satellite bandwidth. If the 30,000 satellite constellation is active by 2026, global internet traffic may reach 800 Tbps. If 50% of this is served by the ~500 satellites over densely populated areas at any one time, then each satellite will have a peak data rate of about 800 Gbps, ten times higher than our original basic estimate, and thus earning potentially ten times the revenue.
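The aggregate numbers in this paragraph follow from a couple of multiplications, sketched below with the same inputs. The 800 Tbps traffic figure, the 50% share, and the ~500 satellites over populated areas are all the post's guesses.

```python
CHANNELS_PER_SAT = 2500
GBPS_PER_CHANNEL = 58
GLOBAL_TRAFFIC_TBPS = 800       # guess for 2026
STARLINK_SHARE = 0.5            # fraction of global traffic carried
SATS_OVER_POPULATED_AREAS = 500
BASELINE_GBPS = 80              # 100 beams x 100 MB/s from the business case

# Hardware ceiling per satellite if every channel ran at the FPGA limit.
ceiling_tbps = CHANNELS_PER_SAT * GBPS_PER_CHANNEL / 1000

# Load per satellite if the busy satellites split the carried traffic evenly.
load_gbps = GLOBAL_TRAFFIC_TBPS * STARLINK_SHARE / SATS_OVER_POPULATED_AREAS * 1000

print(f"Per-satellite ceiling: {ceiling_tbps:.0f} Tbps")
print(f"Per-satellite load:    {load_gbps:.0f} Gbps")
print(f"Multiple of baseline:  {load_gbps / BASELINE_GBPS:.0f}x")
```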

For a satellite in a 330km orbit, a 0.01 radian beam covers an area of 10 sqkm. Exceptionally densely populated areas such as Manhattan might have 300,000 people in this area. If they are all streaming Netflix (7 Mbps in HD), the total data demand is 2000 Gbps, roughly 35 times the current hard limit imposed by FPGA serial outputs. There are two ways around this, one of which is physically possible.

The first is to launch enough satellites that there are more than 35 in the sky over the high demand areas at any one time. Using 1 steradian again for a reasonable addressable sky patch and 400km for an average orbital altitude, this implies a satellite density of 0.0002/sqkm, or 100,000 if evenly spread across the globe. Recall that SpaceX’s chosen orbits boost density over the highly populated 20-40 degrees of latitude and 30,000 satellites starts to seem like a magic number.
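The density estimate can be reproduced as follows. As a simplification, I spread the satellites over the Earth's surface area rather than the slightly larger orbital shell, which appears to be what the rounded numbers in the text assume.

```python
import math

EARTH_RADIUS_KM = 6371.0

SATELLITES_NEEDED = 35     # simultaneous channels over one hot spot
SKY_PATCH_SR = 1.0         # usable patch of sky, in steradians
ALTITUDE_KM = 400.0        # average orbital altitude used in the text

# Area of the orbital shell subtended by the sky patch.
patch_area_km2 = SKY_PATCH_SR * ALTITUDE_KM**2
density_per_km2 = SATELLITES_NEEDED / patch_area_km2

# Simplification: uniform spread over the Earth's surface area.
earth_area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM**2
total_satellites = density_per_km2 * earth_area_km2

print(f"density: {density_per_km2:.5f} per sq km")
print(f"evenly spread constellation: {total_satellites:,.0f} satellites")
```

The result lands a little above 100,000, consistent with the order-of-magnitude figure quoted above.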

The second idea is much cooler but sadly not possible. Recall that beam width is determined by antenna array width. What if multiple antenna arrays on different satellites combined their powers to synthesize a narrower beam, just like radio telescopes such as the Very Large Array? This method is fraught with difficulty because baselines between satellites would have to be accurately tracked to sub-millimeter precision, in order to stabilize beam phase while the satellites move around. And even if this were possible, the resulting beam would have extremely poorly constrained side lobes due to a lack of satellite density in the sky. On the ground, the satellite beam width could be narrowed to a few millimeters in size (it could track a cellphone antenna), but there would be millions of them due to poor intermediate nulling. This failure is a manifestation of the Thinned-Array Curse.
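To see where "a few millimeters" comes from, apply the same wavelength-over-aperture rule to a synthesized aperture. The 1000 km baseline between cooperating satellites is a hypothetical value I picked for illustration.

```python
def spot_size_m(wavelength_m: float, aperture_m: float, range_m: float) -> float:
    """Diffraction-limited spot diameter on the ground (small-angle approx.)."""
    return wavelength_m / aperture_m * range_m


WAVELENGTH_M = 0.01        # Ka band, about 1 cm
RANGE_M = 400e3            # 400 km altitude
SINGLE_ARRAY_M = 1.0       # one satellite's phased array
BASELINE_M = 1000e3        # assumed spacing between cooperating satellites

single = spot_size_m(WAVELENGTH_M, SINGLE_ARRAY_M, RANGE_M)
synthesized = spot_size_m(WAVELENGTH_M, BASELINE_M, RANGE_M)

print(f"single 1 m array:        {single / 1000:.1f} km spot")
print(f"1000 km synthetic array: {synthesized * 1000:.1f} mm spot")
```

The main lobe shrinks by six orders of magnitude, but this sketch says nothing about the side lobes, which is exactly where the scheme fails.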

It turns out that separating channels by angular separation as the satellites are spread over the sky delivers adequate improvements in data rate without breaking the laws of physics.

Use cases

What is the customer profile for Starlink? The default use case is hundreds of millions of suburban subscribers with a pizza box-sized antenna on their roof, but substantial opportunities exist for other streams of revenue.

In remote and rural areas, ground stations won’t need phased array antennas to maximize bandwidth, so smaller user terminals are possible. These range from IoT asset trackers to pocket-sized satellite phones, emergency beacons, or scientific animal tracking equipment.

In densely populated urban environments, Starlink can provide primary and backup backhaul for cellular networks. Each cell tower can have a high-performance ground station mounted to the top, but exploit ground-supplied electricity for amplification and transmission over the last mile.

Finally, even in congested areas during initial roll out, there is a use case for the exceptionally low latency offered by VLEO satellites. Financial firms are willing and able to pay top dollar to get vital information just a bit quicker from every corner of the globe. Even though the path taken by Starlink data is longer due to the hop into space, the vacuum speed of light is about 50% faster than in glass, more than making up for the difference over longer distances.
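A back-of-envelope comparison shows where the crossover lies. The assumptions here are mine: a fibre refractive index of 1.47, a 350 km altitude, a fibre route that follows the ground distance exactly, and a satellite path equal to the ground distance plus one hop up and one hop down. Routing and processing delays are ignored.

```python
C_VACUUM_KM_S = 299_792.458
FIBER_INDEX = 1.47         # assumed typical value for silica fibre
ALTITUDE_KM = 350.0        # assumed VLEO altitude


def fiber_latency_ms(ground_km: float) -> float:
    """One-way latency through fibre laid along the ground path."""
    return ground_km * FIBER_INDEX / C_VACUUM_KM_S * 1000.0


def satellite_latency_ms(ground_km: float, altitude_km: float = ALTITUDE_KM) -> float:
    """One-way latency: up to orbit, along the path in vacuum, back down."""
    return (ground_km + 2.0 * altitude_km) / C_VACUUM_KM_S * 1000.0


def break_even_km(altitude_km: float = ALTITUDE_KM) -> float:
    """Ground distance beyond which the satellite route is faster."""
    return 2.0 * altitude_km / (FIBER_INDEX - 1.0)


print(f"break-even distance: {break_even_km():.0f} km")
for d in (1000, 5000, 10000):
    print(f"{d:>6} km  fibre {fiber_latency_ms(d):5.1f} ms  "
          f"satellite {satellite_latency_ms(d):5.1f} ms")
```

Under these assumptions the satellite route wins beyond roughly 1500 km, and real fibre routes are rarely straight, which only helps the satellite case.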

Impact

The final section of this blog concerns impacts. While this blog is intended to address misconceptions across the spectrum, potential impacts are some of the most contentious. It is my intention here to provide information without editorializing too much. I don’t have a crystal ball, nor any inside information at SpaceX.

The most salient impact for me is increased access to internet. Even my native Pasadena, a vibrant technical city of a million people that is home to several observatories, a world-class university, and the largest NASA center, has very limited options when it comes to internet supply. Across the US and the rest of the world, internet providers have become rent-seeking utilities, hoping to skim off their $50 a month in a cozy, uncompetitive environment. Arguably, any commodity that comes out of the wall of houses is a utility, but internet service quality is less uniform than water or electricity or gas.

The problem with the status quo is that, unlike water, power, and gas, the internet is still young and growing rapidly. We’re still changing the ways we use it. The most transformative use of the internet hasn’t even been invented yet. But “bundled” internet plans choke the possibility of competition and innovation. Billions of people are being left behind by the digital revolution for no better reason than an accident of birth, or their country being a long way from a major undersea cable. In large parts of the world, internet is still provided by geostationary satellite at prohibitive cost.

Starlink, continuously spraying bits from the sky, disrupts this model completely. I don’t know of a better way to bring the unconnected billions online. SpaceX is on the way to becoming an internet service provider and, potentially, an internet company to rival Google and Facebook. I bet you didn’t see that coming.

It’s not obvious that internet satellites are the way to go. SpaceX, and only SpaceX, is in a position to rapidly build out an enormous internet constellation, because only SpaceX had the vision to spend a decade struggling to break the government-military monopoly on space launch. Even if Iridium had beaten cell phones to market by a decade, it could never have achieved wide adoption using conventional launch providers. Without SpaceX and its unique business model, there’s a good chance that global satellite internet simply never happens.

The second major impact is to astronomy. After the launch of the first 60 Starlink satellites there was an outpouring of criticism from the international astronomy community concerned that greatly increased numbers of satellites would ruin their access to the night skies. There is a saying that the quality of the astronomer is directly proportional to the size of their telescope. Without exaggeration, performing astronomy in the modern era is a super tough job, a constant arms race between improving analysis and steadily increasing light pollution and other noise sources.

The last thing any astronomer wants is thousands of bright satellites shooting across the frame. Indeed, the first Iridium constellation was somewhat notorious for its production of “flares” due to large, mirrored panels that could reflect the sun’s light over narrow patches of the Earth. At times, they could be as bright as a quarter moon, and even occasionally damaged sensitive astronomical sensors. There are also valid concerns about Starlink incursion into radio bands used for radio astronomy.

If you download a satellite tracker app, it is possible to see dozens of satellites fly overhead on a clear evening. Satellites are visible after dusk or before dawn, while the sun’s rays still pass overhead and illuminate them. Later during the night, any satellites overhead are within the Earth’s shadow and are effectively invisible. They are tiny, extremely far away, and moving very quickly. It is not impossible that they could occult a distant star for less than a millisecond, but I think even detecting this would be a major headache.

Much of the concern over Starlink light pollution occurred because the first launch’s plane was closely aligned to the Earth’s terminator, meaning that night after night Europe, which was in summer, got a great view of the satellites passing overhead during evening twilight. Further, simulations based on early FCC filings showed that satellites orbiting at 1150km would be visible even after the end of astronomical twilight. There are three phases of twilight: civil, nautical, and astronomical, ending when the sun is respectively 6, 12, and 18 degrees below the horizon. At the end of astronomical twilight, the sun’s rays are about 320 km from the surface at the zenith, well beyond the atmosphere. I believe, based on the Starlink website, that all satellites will be deployed below 600km. In this case, satellites may be visible during twilight but not long after nightfall, greatly reducing the potential impact to astronomy.
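The shadow-height figure follows from simple geometry: a sunbeam grazing a spherical Earth passes over the observer's zenith at a height of R(1/cos θ − 1), where θ is the sun's depression angle. The sketch below ignores atmospheric refraction and treats the Earth as a sphere, and the 550 km altitude is an assumed example below the 600 km ceiling.

```python
import math

EARTH_RADIUS_KM = 6371.0


def shadow_height_km(sun_depression_deg: float) -> float:
    """Height of the Earth's shadow edge at the zenith (no refraction)."""
    theta = math.radians(sun_depression_deg)
    return EARTH_RADIUS_KM * (1.0 / math.cos(theta) - 1.0)


def depression_for_shadow_deg(altitude_km: float) -> float:
    """Sun depression at which a satellite at the zenith enters the shadow."""
    return math.degrees(math.acos(EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km)))


for name, angle in (("civil", 6), ("nautical", 12), ("astronomical", 18)):
    print(f"end of {name} twilight: shadow edge at {shadow_height_km(angle):.0f} km")

for altitude in (550, 1150):
    print(f"{altitude} km satellite at zenith goes dark at "
          f"{depression_for_shadow_deg(altitude):.0f} degrees")
```

This gives about 330 km at the end of astronomical twilight, close to the figure above. A satellite overhead at 550 km stays lit until the sun is about 23 degrees down, while one at 1150 km stays lit until about 32 degrees, which is why the higher shells worried astronomers more.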

The third concern is orbital debris. In a previous post, I pointed out that satellites and debris below 600km will deorbit within a few years due to atmospheric friction, greatly reducing the possibility of Kessler syndrome. I think SpaceX gets a lot of hate for wanting to launch thousands of satellites, as though their designers have never thought of debris. When I look at the details of the Starlink implementation, it is hard for me to imagine a better way of doing it for debris mitigation.

Satellites are deployed at 350km, then flown to their destination orbit using an onboard thruster. Any satellite that dies on launch will de-orbit within weeks, instead of lurking for thousands of years at a higher altitude. This deployment strategy doubles as free acceptance testing. Further, the Starlink satellites are flat in cross section, guaranteeing a big increase in orbital drag if they lose attitude control.

It is not well known but SpaceX pioneered the use of alternative fixtures to frangibolts in space launch. Nearly every other rocket provider uses exploding bolts to deploy stages, satellites, fairings, etc, increasing the potential for orbital debris. SpaceX also deliberately de-orbits its upper stages instead of letting them float around forever, gradually degrading and disintegrating in the harsh space environment.

The final concern I will document here is the potential for SpaceX to replace the existing internet monopoly with another monopoly. In a competitive marketplace, SpaceX would already have a total monopoly on launch. Only rival governments’ desires for assured military access to space keep their expensive and obsolete rockets, often built by big monopolistic defense contractors, in business.

It is certainly possible to imagine that by 2030, SpaceX launches 6000 of its own satellites annually, plus a few spy satellites for old times’ sake. SpaceX’s cheap and reliable satellites would sell “rack space” for instruments built by third parties. Any university that can build a space-rated camera could get it to orbit without also having to cover the expense of building the whole satellite platform. And as a result of this ubiquitous improved access to space, Starlink becomes synonymous with satellites as historical manufacturers fade into irrelevant obscurity.

There is precedent for far-seeing companies so dominating a new market that their product becomes synonymous with the concept. Hoover. Westinghouse. Kleenex. Google. Frisbee. Xerox. Kodak. Motorola. IBM.

Where this can become problematic is if the first company to market engages in anti-competitive practices to preserve its market share, although since President Reagan this is often legal. SpaceX could preserve its Starlink monopoly by forcing other constellation developers to launch on antique Soviet rockets. Similar actions by the United Aircraft and Transport Corporation, as well as price fixing on mail routes, led to its break up in 1934. Fortunately it’s unlikely that SpaceX will preserve an absolute monopoly on fully reusable rockets forever.

More worryingly, SpaceX’s deployment of tens of thousands of satellites into LEO could be construed as a co-option of the commons. Those previously public, unowned orbital slots will be permanently occupied by a private company for private profit. While it is true that SpaceX’s innovations provided the mechanism to commercialize what was previously unremarkable vacuum, much of SpaceX’s intellectual capital was built upon billions of dollars of publicly funded research.

On the one hand, we need laws to protect the proceeds of private investment, research and development. Without this protection, innovators will be unable to finance ambitious projects or will move their companies to places that provide that protection. In either case, the public suffers from the loss of wealth generation. On the other hand, we need laws to protect the people, the nominal owners of common property including the sky, from rent-seeking private entities that would annex public wealth. Neither hand is correct, or even possible, on its own. The development of Starlink affords us all the opportunity to find our way to the safe middle ground for this new market. We will know we have found it when we’ve maximized the rate of innovation and common wealth creation.

Final thoughts

I wrote this blog immediately after I finished my post on Starship. It’s been a big week. Both Starship and Starlink are transformative technology being built before our very eyes, here, in our lifetimes. If I live long enough my grandchildren will be more flabbergasted that I’m older than Starlink than that I’m older than cell phones (museum pieces) or the public internet itself.

Satellite internet for the rich or warlike has been around for a long time. But a ubiquitous, generic, and cheap Starlink is not possible without Starship.

Launch has been around for a long time, but Starship, a launcher cheap enough to be interesting, is not possible without Starlink.

Human spaceflight has been around for a long time, and provided you’re a fighter jet pilot who is also a brain surgeon, you can go. With Starship and Starlink, human space exploration has a credible, near term path from orbital outpost to fully industrialized cities in deep space.

Damn, wishing I would be out there…

I believe in SpaceX and all it entails: flight to Mars and beyond. Elon Musk has ambitions that are bigger than this world. So happy he can show us the way. Looking forward to new things to come from SpaceX and the other divisions of his companies.

The toroidal properties of this satellite constellation are discussed in some detail in the papers at: http://lloydwood.users.sourceforge.net/Personal/L.Wood/satellite-constellations/double-surface-coverage/

It’s not just the intersatellite link handover that is constrained by the satellite movement, but also what it makes sense for a ground terminal to be handed over to. A terminal bouncing between satellites in different orbital shells at different heights will see varying path latency, as will a terminal handed over from ascending to descending satellites. Wood’s ‘managing diversity’ paper lays some of this out.

Great link!

Do the rockets impact our ozone layer?

By sending up that many rockets with all their heat that is

I know it’s been a while since you asked this question, but Everyday Astronaut takes a good look at this issue in his recently released rocket pollution video. https://everydayastronaut.com/rocket-pollution/

Your $1/GB assumption seems pretty wildly optimistic. Broadband pricing in the US (not a cheap market by any means) is already $0.15-0.20/GB, and dropping 30% or more per year.

Some pricing analysis for Starlink at

http://tmfassociates.com/blog/2019/12/12/reality-and-hype-in-satellite-constellations/

I based this on cell phone data rates.

A very well written article with great Fermi estimates!

It would be great if you could also include your thoughts on how Starlink is different from already existing satellite internet companies such as ViaSat and HughesNet.

I am hoping SpaceX will do a “Reality TV Show” about its manned exploration and colonization of the Moon and Mars, and make it exclusive to Starlink subscribers. I would subscribe to Starlink for that alone. How many hundreds of millions of other people will feel the same?

Great article, well thought out, lots of detail. Maybe next time, for the amateur astronomer in me, you could detail the design differences between Starlink and others regarding reflectivity, which lots of less aware folks get very hyper about: the flat square area of the bottom of the satellite, the fact that there is a single solar panel, that it extends upward rather than out to the sides, and that SpaceX is already testing ways to reduce the albedo of the bottom surface of the satellite.

Now that SpaceX has launched its eighth Starlink mission (just this morning) and approximately 7 months have gone by since your blog post, has anything significant changed in your analysis?

I think a few of my guesses have turned out to be very lucky!

Folks: An update would be interesting because it seems that almost every original “thought” behind what constitutes a Starlink satellite changes with every launch. What seems to be happening is that either the cost math is completely off or the system capacity and “price per bit” is off. Taking away onboard processing, no crosslinks, fixed beams not “flexible antennas,” the “promise” of lasers in the future etc. suggest a rethink? And aside from some early filings and theoretical papers, all the rest of the info is coming from the Musk PR machine? All this would be GREAT, but it sure seems like a lot of billions and a lot of time between here and something.

Do you have a ref?

Will bad weather such as rain and cloud affect Starlink? Is there any way around it?

More power!

Seems Starlink is doing well with the weather. If you look at the Starlink subreddit, several users report good experiences with rain and snow.

I read this post several months ago and it was the first one to help me realize how big Starlink was, how ambitious. Now that the beta has started, everyone seems to be doing the same: understanding the huge implications of this satellite constellation. I’d like you to do an update sometime in the future. Regards.

Thank you for this wonderful piece! Such a joy to read even for a lay person! I had 3 questions, one of which was related to the legal aspects around privacy which to some extent is covered in your most recent post (tor like routing) although more color on something like whether the US 4th amendment search & seizure would apply to starlink intrigues me. Let me switch to the other 2 questions I had – I’d appreciate any thoughts or pointers you might have.

Antennas – you describe the limits of the antennas on the satellite. I’m curious about the antennas at the other end. Instead of dish like antennas they provide today, from a physics perspective would it be possible to embed antennas and associated signal processing (similar to an RF front end module and modem DSP/neural processor on a smartphone) on a solar tile and have effectively a large array of distributed processing on roofs? This would not only tie-in very nicely with their solar business but potentially unlock many new possibilities?

Astronomy – how about installing powerful telescopes on LEO satellites? Is this already a thing? Wouldn’t that benefit astronomy?

Interesting ideas.

I think phased array antennas get very difficult to make as they get bigger. They only need to be big enough to make a beam narrow enough to avoid other nearby satellites.

Maybe later Starlink satellites can carry cubesat payloads. Unsure if it would be worth the hassle.

Question on the astronomy problem: Assuming paying entities had the will, what could happen if a Hubble-sized budget ($10B) was expended to mass-produce telescopes and probes employing SpaceX launch and manufacturing techniques? Could we launch 100 Hubble-class observatories? 100 deep-space probes? Keep some opportunistic probes in orbit to send out to surprise visitors to our solar system?

All possible but worth actually following the science return matrix to ensure extra capacity is useful.

This Verge article says Starlink is a failure so far

https://www.theverge.com/22435030/starlink-satellite-internet-spacex-review

I’d be interested in your take on this

My takeaway was that it was a transformative technical achievement in beta and that the reviewer, who was misinformed on several key points such as the removability of the Ethernet cable, complained that the signal was obscured by trees and left the dish on the ground.

Starlink is a quite profitable business in space… What else could be profitable in orbit? As discussed in this blog manufacturing, asteroid mining and beaming energy to earth have no chance whatsoever.

What about computing? To keep up with demand, datacenters are sprouting all over the planet, with corresponding energy needs. In some regions (e.g. Ireland) they use ~20% of the electricity production. If this exponential growth keeps going it would be 100% in 2040 or so; clearly there is a problem.

I predict Starlink will evolve to be a huge datacenter. Energy is cheap out there, and the product is weightless and easily exportable (to Earth or elsewhere). Starlink satellites already have some compute power, and the next generation will have more. I see some issues with radiating the waste heat, nothing insurmountable though.

What do you think?

(I won’t be surprised if someone already got this idea, you maybe)

The Starlink project by SpaceX is nothing short of revolutionary. It’s awe-inspiring to think about how this initiative has the potential to transform the way we connect to the internet, especially in underserved areas.
